Model Policy for Artificial Intelligence (A.I.) in Local Government Agencies

Executive Summary

Model Artificial Intelligence (A.I.) Policy for Public Agencies

Artificial Intelligence (A.I.) is rapidly becoming part of everyday public operations—from automating routine workflows to analyzing complex data that informs public decisions. As these technologies mature, local governments face a new challenge: how to adopt A.I. responsibly, transparently, and in a way that strengthens public trust.

This Model A.I. Policy for Public Agencies provides a framework for doing exactly that. It sets forth a structured yet flexible approach to guide how agencies evaluate, procure, deploy, monitor, and retire A.I. systems. The policy reflects the best practices found in federal, state, and local examples—including guidance from the Office of Management and Budget’s Memorandum M-25-21, the National Institute of Standards and Technology’s A.I. Risk Management Framework, and dozens of city and state policies issued since 2023.

The Model Policy is written for mid-sized agencies—those large enough to manage formal governance, but without the specialized resources of major metropolitan or state organizations. From that baseline, it scales in both directions:

- Appendix A offers a streamlined version for smaller jurisdictions that lack dedicated technology staff or legal counsel.

- Appendix B expands the framework for enterprise-level or statewide agencies with complex data systems, specialized A.I. programs, and higher risk exposure.

- Appendix C lists references and resources, including A.I. policies and guidance from federal, state, and local government agencies.

The core sections of the policy establish practical, attainable expectations for all agencies:

- Accountability – Every A.I. use must have a clearly identified program owner, executive sponsor, and designated A.I. Point of Contact responsible for its outcomes.

- Ethics and Equity – Systems must be fair, explainable, and aligned with the agency’s mission to serve the public.

- Risk-Based Controls – Oversight requirements scale with the potential impact of each system.

- Data Stewardship – Information must be lawful, secure, and appropriate for its intended use.

- Transparency – The public should know when A.I. influences decisions or interactions and should have a way to ask questions or appeal outcomes.

- Training and Competency – Staff must understand A.I.’s capabilities, limits, and ethical boundaries.

- Continuous Improvement – Agencies should monitor performance, address issues, and update their policies as technology and regulations evolve.

This Model Policy recognizes that smaller agencies often lack the staff capacity to sustain complex governance structures. For them, the framework prioritizes clear responsibility, simple documentation, and reliance on vendor attestations or cooperative resources. Larger agencies, meanwhile, can build on this foundation by adding independent audits, ethics boards, and public registries of A.I. use.

The goal of publishing this model is not to prescribe a one-size-fits-all solution but to help every public agency, large or small, adopt A.I. tools confidently and responsibly. The guiding principle is scalability: A.I. governance should always match an agency’s mission, capacity, and level of public impact.

The full policy that follows provides detailed procedures, role definitions, and compliance expectations to help agencies establish transparent, ethical, and sustainable A.I. programs.

[CITY/COUNTY/DISTRICT]

Artificial Intelligence (A.I.) Policy

1. Purpose, Scope, and Authority

1.1 Purpose

It is the policy of [Agency Name] to use Artificial Intelligence (A.I.) technologies in a manner that enhances public services, strengthens transparency, protects privacy, and upholds public trust. This policy establishes minimum standards and responsibilities for evaluating, procuring, developing, deploying, monitoring, and retiring A.I. systems within the agency.

1.2 Scope

This policy applies to all A.I. systems—whether developed internally or procured from third parties—that materially influence or automate any agency decision, communication, or service. It includes generative A.I. tools, data-analysis models, decision-support systems, and automated decision systems used by or on behalf of the agency, including those operated by contractors and vendors where outputs affect agency outcomes.

1.3 Authority

This policy is issued under the authority of [Statutory Citation or Administrative Code Reference] and aligns with applicable federal and state directives concerning responsible A.I. adoption, including the Office of Management and Budget’s Memorandum M-25-21 and the National Institute of Standards and Technology’s A.I. Risk Management Framework (NIST, 2023).

2. Definitions

Key terms are defined below for consistent interpretation.

- Artificial Intelligence (A.I.): A system that performs tasks that normally require human judgment—such as prediction, classification, generation, or decision support—using statistical, algorithmic, or machine-learning methods.

- Generative A.I.: A form of A.I. that produces text, images, code, or other content in response to prompts.

- Automated Decision System: Any A.I. application that influences or determines outcomes affecting individuals or public services.

- High-Impact A.I.: Systems whose errors or biases could materially affect rights, safety, or eligibility for government benefits.

- Data Steward: The staff member designated to ensure data quality, lawful collection, and privacy compliance.

- A.I. Point of Contact (AIPOC): The individual responsible for coordinating implementation of this policy and acting as liaison to executive management.

3. Governance and Accountability

3.1 A.I. Governance Team

The A.I. Governance Team (AIGT) oversees all agency activities involving A.I.

Composition: Executive Sponsor ([Executive Sponsor Title]), Chief Information Officer, Legal/Compliance Counsel, Program Lead, Procurement Manager, and Data Steward.

Quorum: Simple majority.

Frequency: At least quarterly, or as needed for new proposals.

3.2 Roles and Responsibilities

- Executive Sponsor: Approves this policy, resolves escalations, and reports annually to [Agency Governing Body].

- Program Lead: Owns operational A.I. use within their division and ensures compliance.

- Data Steward: Maintains data accuracy, lineage, and privacy compliance.

- Procurement Manager: Incorporates A.I. requirements into contracts, ensuring vendors provide transparency and audit rights.

- A.I. Point of Contact: Coordinates assessments, maintains inventory, and provides staff support.

3.3 Reporting

The AIGT submits an annual A.I. Use Report summarizing new systems, risk tiers, incidents, and lessons learned.

4. Ethical and Legal Principles

It is the policy of [Agency Name] that all A.I. systems shall adhere to the following principles:

- Fairness: Prevent bias and ensure equitable treatment of all individuals.

- Transparency: Inform staff and the public when A.I. assists decisions or communications.

- Accountability: Retain clear human oversight and responsibility.

- Privacy: Comply with all applicable data-protection and cybersecurity standards.

- Security: Safeguard models and data against misuse or unauthorized access.

- Purpose Limitation: Employ A.I. only to advance legitimate public purposes consistent with the agency's mission.

5. Risk Management Framework

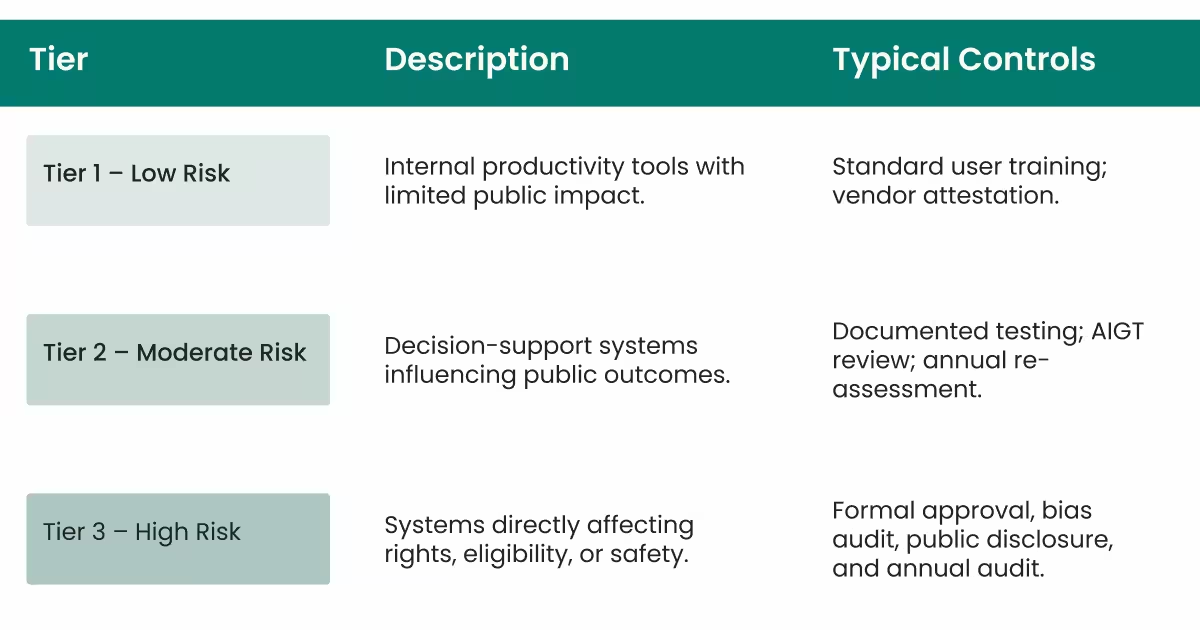

5.1 Tiered Risk Model

5.2 Assessment Criteria

Risk tier is determined by: data sensitivity, impact on individuals, system autonomy, reversibility, and scale.
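As one way to make these criteria concrete, the sketch below rates each of the five criteria from 1 (low) to 3 (high) and takes the highest rating as the tier. The 1 to 3 scale, the "highest rating wins" rule, and every name in the code are illustrative assumptions, not part of this policy. The sketch also assumes three tiers, consistent with the policy's references to Tier 2–3 systems. Agencies should substitute the tier definitions they adopt under Section 5.1.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RiskRatings:
    """Hypothetical 1-3 ratings (1 = low, 3 = high) for the Section 5.2 criteria."""
    data_sensitivity: int
    impact_on_individuals: int
    system_autonomy: int
    reversibility: int  # assumed convention: 3 = outcomes are hard to reverse
    scale: int


def assign_tier(ratings: RiskRatings) -> int:
    """Return the tier as the highest single rating (an assumed rule)."""
    values = [getattr(ratings, f.name) for f in fields(ratings)]
    for value in values:
        if value not in (1, 2, 3):
            raise ValueError("each rating must be 1, 2, or 3")
    return max(values)


# Example: a public-facing FAQ chatbot with moderate impact and scale.
chatbot = RiskRatings(
    data_sensitivity=1,
    impact_on_individuals=2,
    system_autonomy=1,
    reversibility=1,
    scale=2,
)
print(assign_tier(chatbot))
```

Taking the maximum is a conservative choice: a single high rating, such as highly sensitive data, is enough to place a system in the highest tier. A weighted score is another option for agencies that prefer finer gradations.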

5.3 Controls and Reviews

- Conduct pre-deployment risk assessment.

- Require bias and accuracy testing for Tier 2–3.

- Schedule monitoring intervals based on tier.

- Suspend or decommission systems if adequate controls cannot be maintained.

6. Data Governance and Quality

- Lawful Collection: Gather and process data consistent with [Relevant Privacy Law].

- Data Integrity: Verify accuracy and completeness prior to model training or use.

- Bias Checks: Evaluate datasets for representativeness.

- Access Controls: Restrict data access to authorized personnel.

- Retention and Disposal: Retain per records schedules; securely delete upon retirement.

- Third-Party Data: Vendors must disclose data provenance and maintain equivalent protections.

7. Lifecycle Management

7.1 Proposal and Approval

Each A.I. initiative must include:

- A Use Case Summary;

- Preliminary Risk Assessment;

- Legal and Privacy Review; and

- AIGT approval for Tier 2–3 systems.

7.2 Procurement and Contracting

Solicitations and contracts shall include provisions for:

- Transparency and documentation;

- Bias and security testing;

- Access for audit;

- Data ownership and exit clauses.

7.3 Development or Configuration

Ensure explainability, security, and equity considerations are integrated from the outset.

7.4 Testing and Validation

Conduct technical validation, fairness review, and explainability assessment before production use.

7.5 Deployment

Implement human-in-the-loop oversight for Tier 2–3 systems.

7.6 Monitoring and Incident Response

Monitor performance and user feedback; log issues. Report significant incidents to the AIGT within 24 hours.
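For agencies that track incidents in a ticketing or logging tool, the 24-hour reporting window can be checked automatically. The sketch below is a minimal illustration. It assumes the clock starts when the incident is detected, which this policy does not specify, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Reporting window from Section 7.6.
REPORTING_WINDOW = timedelta(hours=24)


def report_deadline(detected_at: datetime) -> datetime:
    """Latest time a significant incident may be reported to the AIGT.

    Assumption: the window starts at detection time.
    """
    return detected_at + REPORTING_WINDOW


def is_overdue(detected_at: datetime, now: datetime) -> bool:
    """True if the reporting window has already passed."""
    return now > report_deadline(detected_at)


detected = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
print(report_deadline(detected).isoformat())
print(is_overdue(detected, detected + timedelta(hours=25)))
```

Using timezone-aware timestamps avoids ambiguity when incidents are logged by vendors or staff in different time zones.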

7.7 Retirement

Document lessons learned; ensure data is archived or deleted appropriately; terminate vendor access.

8. Transparency, Reporting, and Auditability

- Internal A.I. Register: Maintain an inventory of all active A.I. systems.

- Public Disclosure: Publish summaries for Tier 2–3 systems, including purpose, datasets, oversight contact, and appeal process.

- User Notification: Inform individuals when interacting with automated systems.

- Audit Trails: Retain logs for three years or per records retention schedules.

- Periodic Review: Internal audit every two years; more frequently for high-impact systems.
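The register and disclosure items above can be kept in a spreadsheet or a simple structured record. The sketch below shows one possible record layout. The field names, the example values, and the handling of leap-day dates are assumptions for illustration only. The public summary fields and the two-year audit interval come from this section.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class RegisterEntry:
    """One hypothetical row in the internal A.I. register."""
    system_name: str
    tier: int
    purpose: str
    datasets: List[str]
    oversight_contact: str
    appeal_process: str
    last_audit: date

    def public_summary(self) -> Optional[dict]:
        """Return the public disclosure fields for Tier 2-3 systems, else None."""
        if self.tier < 2:
            return None
        return {
            "system": self.system_name,
            "purpose": self.purpose,
            "datasets": self.datasets,
            "oversight_contact": self.oversight_contact,
            "appeal_process": self.appeal_process,
        }

    def next_audit_due(self) -> date:
        """Latest date for the next internal audit (two-year cycle)."""
        try:
            return self.last_audit.replace(year=self.last_audit.year + 2)
        except ValueError:
            # A Feb 29 audit date has no match two years later; use Feb 28.
            return self.last_audit.replace(year=self.last_audit.year + 2, day=28)


entry = RegisterEntry(
    system_name="Permit triage assistant",  # example value, not a real system
    tier=2,
    purpose="Sort incoming permit applications by type",
    datasets=["Permit application records"],
    oversight_contact="aipoc@example.gov",
    appeal_process="Request staff review through the permit office",
    last_audit=date(2025, 3, 1),
)
print(entry.public_summary())
print(entry.next_audit_due())
```

The two-year date is an outer limit. High-impact systems would need a shorter interval, which each agency sets for itself.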

9. Training and Competency

- Provide annual A.I. ethics and data-governance training for all staff using or supervising A.I. systems.

- Provide specialized training for technical staff on bias testing, model validation, and interpretability.

- Require vendor partners to certify comparable staff training.

10. Incident Response and Escalation

Trigger Events: data breaches, significant errors, biased findings, or harm complaints.

Process:

- Contain and investigate;

- Notify AIGT and [Agency Head Title];

- Implement corrective action;

- Document findings;

- Disclose publicly if impact meets Tier 3 criteria.

11. Stakeholder Engagement and Feedback

- Solicit community feedback for high-impact implementations.

- Provide contact information for members of the public to submit questions or appeal A.I.-assisted decisions.

- Share lessons with peer agencies through cooperative networks.

12. Policy Maintenance

- Review Cycle: Every two years or following major statutory or technological change.

- Change Control: The AIGT documents revisions with version numbers and approval dates.

- Approval Path: AIGT → Executive Sponsor → [Agency Governing Body].

Adoption Clause (sample):

Adopted this [Day] of [Month, Year] by [Agency Name], pursuant to [Resolution or Administrative Order Number].

[Executive Sponsor Title]

[Agency Head or Governing Body Chair]


APPENDIX A – Scaled Implementation for Small Agencies (Lean Model)

Objective: Preserve accountability and transparency without creating unsustainable administrative burdens.

- Appoint a single A.I. Point of Contact (AIPOC) to coordinate all compliance.

- Use a two-tier risk matrix (Low / High).

- Require vendor attestations instead of in-house audits.

- Document each system on a one-page summary form.

- Use shared regional or state services for audits and training.

- Publish a brief online statement of A.I. use and contact email.

- Conduct annual refresher training (~30 minutes).

- Report incidents directly to the [Agency Head Title] and seek technical support as needed.

APPENDIX B – Scaled Implementation for Large or Enterprise Agencies (Enhanced Model)

Objective: Deepen oversight and alignment with national frameworks.

- Establish a formal A.I. Ethics and Governance Board with bylaws and quorum rules.

- Appoint a Chief A.I. Officer (CAIO) and supporting compliance analysts.

- Apply a quantitative risk-scoring system aligned with NIST A.I. RMF.

- Conduct independent third-party audits annually for Tier 3 systems.

- Maintain a public A.I. registry and publish audit summaries.

- Partner with academic or civic panels for ethics reviews.

- Implement real-time monitoring dashboards for performance and drift detection.

- Participate in state or national A.I. communities of practice to share best practices.

APPENDIX C – References and Resources

Federal / National Frameworks

- National Institute of Standards and Technology. (2023). NIST Artificial Intelligence Risk Management Framework Version 1.0. https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

- Office of Management and Budget. (2025, Apr 3). M-25-21: Accelerating Federal Use of A.I. through Innovation, Governance, and Public Trust. https://www.whitehouse.gov/wp-content/uploads/2025/02/M-25-21-Accelerating-Federal-Use-of-AI-through-Innovation-Governance-and-Public-Trust.pdf

State and Local Policies

- Commonwealth of Pennsylvania. (2025, Aug 27). Artificial Intelligence Policy. https://www.pa.gov/content/dam/copapwp-pagov/en/oa/documents/policies/it-policies/artificial%20intelligence%20policy.pdf

- Maine Office of Information Technology. (2025, Sept 30). Generative A.I. Policy. https://www.maine.gov/oit/sites/maine.gov.oit/files/inline-files/GenAIPolicy.pdf

- Kentucky Commonwealth Office of Technology. (2025, Oct 6). CIO-126: Artificial Intelligence Policy. https://technology.ky.gov/policies-and-procedures/PoliciesProcedures/CIO-126%20Artificial%20Intelligence%20Policy.pdf

- City of Seattle, WA. (2025, May 6). Artificial Intelligence Policy (POL-211). https://seattle.gov/documents/departments/tech/privacy/ai/artificial_intelligence_policy-pol211%20-%20signed.pdf

- City of San José, CA. (2025, Apr 24). AI Policy 1.7.12 and Generative A.I. Guidelines. https://mrsc.org/getmedia/d4dca306-4719-4c6f-8838-f488754f4b25/m58SJgaipol.pdf

- City of Richmond, VA. (2025, Jun 3). Administrative Regulation 2.13: Artificial Intelligence (AI) Policy. https://www.rva.gov/sites/default/files/2025-06/AR%202.13%20Artificial%20Intelligence%20(AI)%20Policy_FINAL_2025_06_03.pdf